Data bias in AI

Artificial intelligence (AI) systems can be biased towards certain shapes or colours. When such systems are applied to situations that involve people, this bias can manifest as discrimination based on skin colour or gender. This lesson explores bias in AI: where it comes from and what can be done to prevent it.

Additional details

| Year band(s) | 5-6, 7-8 |
|---|---|
| Content type | Lesson ideas |
| Format | Web page |
| Core and overarching concepts | Digital systems, Data representation, Specification (decomposing problems), Impact and interactions |
| Australian Curriculum Digital Technologies code(s) | AC9TDI6K01: Investigate the main internal components of common digital systems and their function; AC9TDI6K02: Examine how digital systems form networks to transmit data; AC9TDI8K02: Investigate how data is transmitted and secured in wired and wireless networks including the internet; AC9TDI6K03: Explain how digital systems represent all data using numbers; AC9TDI8K04: Explain how and why digital systems represent integers in binary; AC9TDI6P01: Define problems with given or co-developed design criteria and by creating user stories; AC9TDI8P04: Define and decompose real-world problems with design criteria and by creating user stories; AC9TDI6P06: Evaluate existing and student solutions against the design criteria and user stories and their broader community impact; AC9TDI8P10: Evaluate existing and student solutions against the design criteria, user stories and possible future impact |
| Technologies & Programming Languages | Artificial Intelligence |
| Keywords | Artificial Intelligence, AI, artificial, intelligence, teachable machine, smart phone, algorithms, problem solving, digital systems, Scratch, Lesson idea, Lesson plan, Digital Technologies Institute, data bias |
| Organisation | ESA |
| Copyright | Creative Commons Attribution 4.0, unless otherwise indicated. |

Related resources

- Home/School communications

  In this lesson sequence, students use big data sets and school surveys to design (and, as an extension activity, make) a new digital communication solution for the school.

-

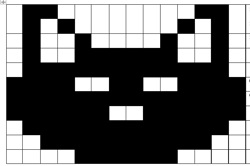

Using binary to create on/off pictures

In this sequence of lessons students develop an understanding of how computers store and send digital images and they are able to represent images in a digital format.

-

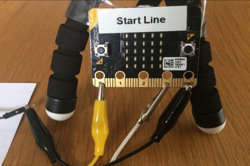

Creating a digital start line and finish line with micro:bits (Years 7-8)

The following activity suggests one-way Digital Technologies could be integrated into a unit where vehicles are being designed and produced.

-

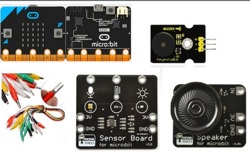

Networking with the micro:bit

This downloadable free book presents a series of activities to teach the basics of computer networks. While you may not learn all aspects of computer networking, these activities provide a useful selection and serve as a good starting point to cater for your student's needs, skill and knowledge.

- DIY micro:bit metal detector (Years 5-6)

  This activity shows one way to incorporate Digital Technologies into a goldfields unit in an authentic way using a micro:bit.

- Classroom ideas: Micro:bit Environmental Measurement (visual programming) (Years 5-6)

  This tutorial shows the coding needed for digital solutions to some environmental issues, which can be created using pseudocode and visual programming.

- Classroom ideas: Micro:bit Environmental Measurement (visual and general-purpose programming) (Years 5-8)

  Investigating environmental data with Micro:bits: This tutorial shows the coding needed for digital solutions to some environmental issues, which can be created using pseudocode and visual programming.

- Home automation: General purpose programming

  Investigate home automation systems, including those powered by artificial intelligence (AI) with speech recognition capability.